Developing a virtual reality simulation system for preoperative planning of thoracoscopic thoracic surgery

Introduction

Surgery remains the standard of care for primary malignant lesions of the lung. Conventional thoracotomy has been gradually supplanted by minimally invasive surgery (MIS) over recent years, including video-assisted thoracoscopic surgery (VATS) and robotic-assisted thoracoscopic surgery (RATS), following continued improvements in surgical technologies and techniques. VATS and RATS are increasingly being applied to more complex procedures, including anatomic segmentectomy. However, the lack of stereoscopic vision and/or reduced tactile feedback during MIS may lead to unexpected intraoperative or postoperative complications, such as bleeding, pneumonia, atelectasis, and loss of pulmonary function (1). Therefore, accurate delineation of pulmonary anatomy and sufficient surgical margins are critical for successful sublobar resection.

Preoperative surgical planning with interactive three-dimensional (3D) computed tomography (CT) reconstruction is a useful method to enhance the surgeon’s knowledge of the patient’s anatomy, including identification of anatomic variants. Intraoperative 3D navigation is feasible and could contribute to the safety and accuracy of anatomic resection (2,3). Imaging software programs that reconstruct two-dimensional (2D) CT data for 3D visualization of pulmonary vessels and bronchi have shown advantages for planning accurate sublobar resection (3,4). However, 3D CT provides only static simulation; dynamic simulation, reflecting the intraoperative deformation of the lung, has not been developed. Several recent reports have described the innovative use of virtual reality (VR) simulation as a surgical training tool (5,6).

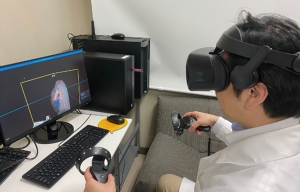

VR navigation with head-mounted displays (HMDs) is an immersive experience in which the user's entire field of vision is replaced with a digital image. The images displayed to each eye differ slightly to create a stereoscopic effect. Advanced volume rendering provides more realistic representations, transforming medical images into a powerful communication tool.
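The stereoscopic effect described above can be illustrated with a short sketch. It assumes a simple pinhole viewing model; the interpupillary distance, the focal length, and the function names are hypothetical values chosen for illustration and are not taken from any HMD or rendering software discussed in this article.

```python
# Minimal sketch of stereoscopic disparity under a pinhole model.
# IPD_M and FOCAL_PX are illustrative assumptions, not settings of any real HMD.

IPD_M = 0.064      # assumed interpupillary distance in metres
FOCAL_PX = 800.0   # assumed focal length in pixels


def project_x(point_x, point_z, eye_x, focal_px=FOCAL_PX):
    """Horizontal image coordinate of a point seen from an eye at (eye_x, 0, 0)."""
    if point_z <= 0:
        raise ValueError("point must be in front of the viewer (z > 0)")
    return focal_px * (point_x - eye_x) / point_z


def disparity_px(point_x, point_z, ipd_m=IPD_M, focal_px=FOCAL_PX):
    """Left-minus-right horizontal offset of the same point in the two eye images."""
    left = project_x(point_x, point_z, -ipd_m / 2, focal_px)
    right = project_x(point_x, point_z, +ipd_m / 2, focal_px)
    return left - right


if __name__ == "__main__":
    for depth_m in (0.5, 1.0, 2.0):
        print(f"depth {depth_m} m -> disparity {disparity_px(0.0, depth_m):.1f} px")
```

Under these assumptions the disparity is inversely proportional to depth, which is why nearer structures appear to stand out more strongly in the headset.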

The BananaVision software is a networked, multiuser VR tool for VR HMDs developed by researchers from Colorado State University. This platform allows users to better visualize both human anatomical learning models and medical images for the creation of instructional content and clinical evaluation. In addition to the educational advantages of VR platforms in general, the networking capabilities allow multiple clinicians to interact in real time with other specialists inside a common virtual space. Together, these capabilities provide a more meaningful way to interact with scientific and medical imaging data.

In this study, we aimed to develop a novel HMD-based VR simulation system that generates virtual dynamic images from patient-specific CT data, and to evaluate its utility for the surgical planning of lung segmentectomy. We present the following article in accordance with the MDAR reporting checklist (available at http://dx.doi.org/10.21037/jtd-20-2197).

Methods

This study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the Research Ethics Board of Hokkaido University Hospital, Japan (018-0396) as a single-center, phase I feasibility study, and informed consent was obtained from all patients.

Patients underwent a preoperative contrast-enhanced CT scan after intravenous administration of iodinated contrast medium (300 mgI/kg) into the upper extremity over 15 seconds using a mechanical injector (Dual Shot GX; Nemoto Kyorindo, Tokyo, Japan), followed by a 30 mL saline flush. Contrast-enhanced CT scans were obtained using an Area Detector CT (ADCT; Aquilion ONE; Canon, Tokyo, Japan) and saved as DICOM files on a server client-type workstation (Zio Station 2; AMIN, Tokyo, Japan). The 3D image data were extracted in DICOM format from the Zio Station 2. DICOM data of the lung, vessels [pulmonary artery (PA) and pulmonary vein (PV)], bronchi, and tumor were saved separately.
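As a worked illustration of the weight-based contrast protocol, the following sketch converts the stated dose (300 mgI/kg over 15 seconds) into a contrast volume and an injection rate. The iodine concentration of the agent and the patient weight are assumptions made for the example only; neither is reported in this study.

```python
# Worked arithmetic for a weight-based iodine dose delivered over a fixed time.
# The 300 mgI/kg dose and 15 s duration come from the protocol above;
# the concentration and body weight used below are illustrative assumptions.

DOSE_MGI_PER_KG = 300.0      # from the protocol
INJECTION_TIME_S = 15.0      # from the protocol


def contrast_plan(weight_kg, concentration_mgi_per_ml):
    """Return (total iodine in mgI, contrast volume in mL, injection rate in mL/s)."""
    if weight_kg <= 0 or concentration_mgi_per_ml <= 0:
        raise ValueError("weight and concentration must be positive")
    iodine_mg = DOSE_MGI_PER_KG * weight_kg
    volume_ml = iodine_mg / concentration_mgi_per_ml
    rate_ml_per_s = volume_ml / INJECTION_TIME_S
    return iodine_mg, volume_ml, rate_ml_per_s


if __name__ == "__main__":
    # Hypothetical 60 kg patient and a 300 mgI/mL agent (both assumed).
    iodine, volume, rate = contrast_plan(60.0, 300.0)
    print(f"{iodine:.0f} mgI, {volume:.0f} mL, {rate:.1f} mL/s")
```

For the assumed 60 kg patient and 300 mgI/mL agent, this gives 18,000 mgI in 60 mL, injected at 4 mL/s.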

The DICOM dataset was imported into Fiji (https://fiji.sc/) for automated centerline detection of the PA, PV, and bronchi. The raw output was then imported into BananaVision using a dedicated macro plugin.
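To give a sense of what centerline detection produces, the sketch below estimates a centerline for a single, non-branching tubular structure by taking the centroid of the segmented voxels in each axial slice. This is a deliberately simplified stand-in and not the algorithm used by Fiji; the function names and the synthetic vessel are hypothetical, and branching vessels or bronchi would require a true skeletonization method.

```python
# Simplified centerline estimate: one centroid per axial slice of a binary mask.
# Illustration only; this is not Fiji's method and it ignores branching.

from collections import defaultdict


def slice_centroids(voxels):
    """Map an iterable of (x, y, z) voxel indices to an ordered list of (cx, cy, z)."""
    by_slice = defaultdict(list)
    for x, y, z in voxels:
        by_slice[z].append((x, y))
    centerline = []
    for z in sorted(by_slice):
        points = by_slice[z]
        cx = sum(p[0] for p in points) / len(points)
        cy = sum(p[1] for p in points) / len(points)
        centerline.append((cx, cy, z))
    return centerline


def synthetic_vessel(length=5, half_width=1, drift=1):
    """Build a hypothetical square-section tube that shifts in x by `drift` per slice."""
    voxels = set()
    for z in range(length):
        centre_x = 10 + drift * z
        for dx in range(-half_width, half_width + 1):
            for dy in range(-half_width, half_width + 1):
                voxels.add((centre_x + dx, 10 + dy, z))
    return voxels


if __name__ == "__main__":
    for point in slice_centroids(synthetic_vessel()):
        print(point)
```

The output is an ordered list of points that a viewer could render as a polyline through the vessel lumen.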

The BananaVision platform was operated on a Hewlett Packard EliteDesk workstation equipped with an Intel i7-8700 CPU, 32 GB RAM, an NVIDIA RTX 2080 GPU, and Windows 10 Pro. The HMD used was a Samsung Odyssey+. The BananaVision platform renders case-specific virtual models following importation of the processed CT data output.

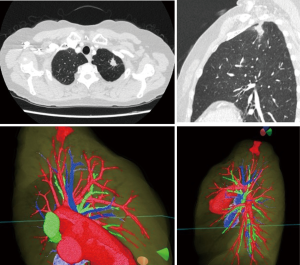

Preoperative review of the resection plan was performed using the patient-specific 3D images. The original preoperative chest CT scan demonstrated a left upper lobe tumor, and a lingula-sparing left upper segmentectomy was planned. Representative images of the 3D model demonstrate the relationship between the tumor and pulmonary anatomy (Figure 1). The surgeon wears the HMD to view the VR image, and a hand-held controller allows the surgeon to interact with the virtual objects. In preparing for VATS sublobar resection, critical segmental vessels and bronchi associated with the target segment were identified (Figure 2, Video 1).

Results

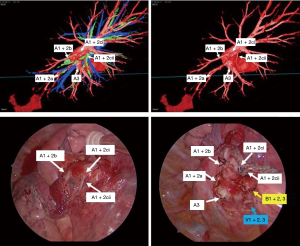

Operations were performed with the patient in the lateral decubitus position under general anesthesia with one-lung ventilation. Two ports and a utility incision were made without rib spreading. The operation was performed entirely via thoracoscopic visualization. Representative images of the 3D model demonstrate high-resolution anatomical details of sub-subsegmental pulmonary artery branches. Intraoperative VATS images confirm the same vessel orientation as in the 3D model, prior to and after vessel ligation (Figure 3). The lingula-sparing left upper segmentectomy presented here was performed for resection of a 20 mm nodular lesion in left segment 1+2. Based on preparation with the VR HMD, we readily identified variant PA vessel anatomy. Intraoperative indocyanine green (ICG) fluorescence was used to facilitate demarcation of the intersegmental border, as previously described (7). In brief, near-infrared thoracoscopy (OPAL1, KARL STORZ, Germany) was used to guide electrocautery marking of the resected segment following ligation of the relevant PA branches and injection of ICG (3 mg/kg). Lung parenchyma was divided using electrocautery or endoscopic staplers along the marked intersegmental border (Video 2).

Discussion

This is the first report to describe preoperative planning prior to anatomic pulmonary resection using an HMD-based VR system. In this preliminary study, we generated patient-specific high-resolution 3D lung models that could be freely manipulated using the BananaVision software platform for display on VR HMDs.

We identified several advantages of the VR HMD setup compared to conventional preoperative imaging review. Preoperative surgical review using 3D reconstructions has already shown great value for thoracic surgery (2-4). In the present study, we relied on the BananaVision platform to obtain VR 3D images for preoperative visualization of pulmonary anatomy, which allowed the surgeon to interact with the reconstruction more easily. Our results suggest that an optimal pulmonary resection plan derived from preoperative VR 3D simulation could be directly applied in the operating room, giving surgeons confidence in their VATS approach. Surgeons could view the lung model from any potential orientation relative to the thoracoscope and instruments, facilitating preoperative planning. Anomalous pulmonary vessels could be easily identified, allowing surgeons to plan in advance how to manage these structures. Developments in computer science and their integration into the medical field have provided new opportunities for preoperative planning prior to complex procedures. These developments may benefit both the smooth technical performance of complex procedures and patient safety.

In terms of simulation training, preoperative review of patients’ individual anatomy, tumor size, and tumor location could be beneficial for assessment of surgical resectability during multidisciplinary case discussions. The VR 3D reconstructions can also be important educational tools for trainees. Navigation and review of the 3D models do not require the same interpolation as standard cross-sectional imaging; these models could therefore help trainees review basic anatomy as well as prepare more effectively for complex cases. The multi-user functionality of BananaVision allows both the trainee and attending surgeon to manipulate the same model cooperatively in a shared space, enabling interactive discussions. Simulation-based training can teach technical aspects of procedures and reduce the learning curve, since the surgical trainee can practice technical skills in a highly representative and time-efficient manner to achieve surgical capability (8). With simulation-based training, the trainee can adapt to the variety of procedures and intraoperative complications. Training using VR simulators has been shown to improve performance in the operating room (8).

At this time, BananaVision has been applied only as a preoperative simulation tool. However, it may also have utility for real-time intraoperative navigation. If automated registration of endoscopic images to 3D reconstructions were integrated into the BananaVision platform, surgeons could theoretically perform surgical resection with a real-time, dynamic BananaVision overlay.

Previously, our group developed an AR thoracoscopic surgical navigation system that accounts for the thoracoscope position to aid in confirmation of tumor location and surgical margin decision-making during MIS (9). This system was composed of a cone beam CT (CBCT), a conventional VATS platform, an optical tracking system, and a custom 3D visualization dashboard that linked these components together (GTx Eyes) (9). The CBCT-based 3D model is displayed from the perspective of the tracked thoracoscope and can be overlaid onto the endoscopic image to permit visualization of otherwise obscured structures. Our future goal is to integrate the previous GTx Eyes platform with the VR HMD system described here. This hypothetical combined system would provide surgeons with improved augmented guidance to enhance surgeon performance during complex thoracoscopic procedures.
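The idea of displaying a 3D model from the perspective of a tracked thoracoscope can be sketched with a basic pinhole camera projection. The pose, camera intrinsics, and function names below are hypothetical and chosen only for illustration; they do not describe the GTx Eyes implementation, and a real system would also need lens distortion correction and camera calibration.

```python
# Sketch of projecting a 3D model point into a tracked camera image (pinhole model).
# Pose, intrinsics, and function names are hypothetical, not taken from GTx Eyes.


def world_to_camera(point, rotation, translation):
    """Apply p_cam = R @ p_world + t, with R given as three row tuples."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def project(point_cam, fx, fy, cx, cy):
    """Project a camera-frame point to pixel coordinates; None if behind the camera."""
    x, y, z = point_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


if __name__ == "__main__":
    identity = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
    # Assumed pose: camera axes aligned with the world frame, target 100 mm ahead.
    tumor_world = (10.0, -5.0, 0.0)
    tumor_cam = world_to_camera(tumor_world, identity, (0.0, 0.0, 100.0))
    print(project(tumor_cam, fx=500.0, fy=500.0, cx=320.0, cy=240.0))
```

In this sketch, the rotation and translation would be updated from the optical tracking system on every frame, so that the projected model points stay aligned with the endoscopic image as the thoracoscope moves.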

An ideal multimodal imaging system would put critical anatomic information right where it is needed in the operating room. At present, this level of integration is only possible through the surgical cockpit in RATS. However, the risk of information overload must be taken into account. It is vital that critical anatomy is displayed to the operator in a manner that is relevant (i.e., appropriately oriented) and timely (i.e., during critical dissection or prior to no-return decisions like stapling of bronchovascular structures). We believe a surgical approach combining a VR HMD system and a robotic platform could limit intraoperative and postoperative complications and improve overall surgical performance.

Conclusions

In conclusion, this initial experience demonstrates the feasibility of preoperative planning using VR simulation with HMD. Routine preoperative imaging combined with VR could facilitate preoperative planning to improve the safety and accuracy of anatomic resection. Further studies are warranted to investigate the role of intraoperative VR guidance, especially during complex sublobar resections.

Acknowledgments

We would like to thank Dr. Taku Sugiyama and Dr. Hiroaki Motegi (Department of Neurosurgery, Hokkaido University) and Dr. Peter Y Shane (Central Clinical Facility, Hokkaido University) for their support in developing our VR workflow.

Funding: None.

Footnote

Reporting checklist: The authors have completed the MDAR reporting checklist. Available at http://dx.doi.org/10.21037/jtd-20-2197

Data Sharing Statement: Available at http://dx.doi.org/10.21037/jtd-20-2197

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/jtd-20-2197). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the Research Ethics Board of Hokkaido University Hospital, Japan (018-0396) as a single-center, phase I feasibility study, and informed consent was obtained from all patients.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Yao F, Wang J, Yao J, et al. Three-dimensional image reconstruction with free open-source OsiriX software in video-assisted thoracoscopic lobectomy and segmentectomy. Int J Surg 2017;39:16-22.

2. Sardari Nia P, Olsthoorn JR, Heuts S, et al. Interactive 3D Reconstruction of Pulmonary Anatomy for Preoperative Planning, Virtual Simulation, and Intraoperative Guiding in Video-Assisted Thoracoscopic Lung Surgery. Innovations (Phila) 2019;14:17-26.

3. Tokuno J, Chen-Yoshikawa TF, Nakao M, et al. Resection Process Map: A novel dynamic simulation system for pulmonary resection. J Thorac Cardiovasc Surg 2020;159:1130-8.

4. Chan EG, Landreneau JR, Schuchert MJ, et al. Preoperative (3-dimensional) computed tomography lung reconstruction before anatomic segmentectomy or lobectomy for stage I non-small cell lung cancer. J Thorac Cardiovasc Surg 2015;150:523-8.

5. Solomon B, Bizekis C, Dellis SL, et al. Simulating video-assisted thoracoscopic lobectomy: a virtual reality cognitive task simulation. J Thorac Cardiovasc Surg 2011;141:249-55.

6. Jensen K, Bjerrum F, Hansen HJ, et al. A new possibility in thoracoscopic virtual reality simulation training: development and testing of a novel virtual reality simulator for video-assisted thoracoscopic surgery lobectomy. Interact Cardiovasc Thorac Surg 2015;21:420-6.

7. Mun M, Nakao M, Matsuura Y, et al. Thoracoscopic segmentectomy for small-sized peripheral lung cancer. J Thorac Dis 2018;10:3738-44.

8. Jensen K, Bjerrum F, Hansen HJ, et al. Using virtual reality simulation to assess competence in video-assisted thoracoscopic surgery (VATS) lobectomy. Surg Endosc 2017;31:2520-8.

9. Lee CY, Chan H, Ujiie H, et al. Novel Thoracoscopic Navigation System With Augmented Real-Time Image Guidance for Chest Wall Tumors. Ann Thorac Surg 2018;106:1468-75.